Animating a MetaHuman in UE4 requires a motion capture solution (or pre-recorded animation assets for a humanoid rig) and a basic knowledge of keyframe animation. Once you're comfortable with the process of retargeting the movement data onto the standard MetaHuman rig, it’s simple to repeat for your other characters. So, whether you're looking to create your own video game or want to render your VR/AR characters in real-time, read on to learn how to get your MetaHuman moving!

What is the MetaHuman Creator?

Epic Games’ MetaHuman Creator is a browser-based tool that allows users to create ultra-realistic digital humans. Built on advanced 3D scans of real humans, it lets you create complete human models that look impressively lifelike. The software was released in beta in 2021 and is currently free to use for anyone who applies for early access.

Creating your MetaHuman is like creating a character avatar in a video game. You can customize every detail of your digital human using a series of sliders and morph tools. Every model is fully textured and rigged for animation by default. Clothing and hair are also included. There are a few big advantages to using the MetaHuman Creator to develop your characters. Specifically, the native integration with Unreal Engine, automatic animation-ready rigs, and simple polygon optimization will save you tons of time when developing games, digital doubles, and virtual productions.

MetaHuman models are a huge time saver for game developers

MetaHumans are the perfect starting point for realistic game characters. In minutes you can fiddle with a few sliders to get the look you want and export a base human avatar that’s ready to customize. For background characters, customization can be minimal. In the case of hero characters, developers will need to add texturing for characteristics such as scars and modeling for world-specific clothing or accessories.

This method is far more efficient than creating an equivalent human character from scratch (a task that can take animators hundreds of hours). It’s also far more cost-effective than buying a ready-made asset, as you can create an unlimited number of MetaHumans for free. In a nutshell, combining Unreal Engine, MetaHuman Creator, and a few hours of tweaking can significantly reduce the time and effort that studios and indie devs spend on producing realistic characters for video games.

Why use MetaHumans for film, virtual productions, and previsualization

While MetaHuman characters can’t yet take the place of real human beings, they still have their place on the big screen. Some great ways people are using MetaHumans are to:

- Quickly create digital doubles for stunts, previsualization, or other purposes

- Assist in visualizations for virtual productions or populate VR stages

- Populate crowds with high-quality background characters

Add motion capture, and a MetaHuman becomes a rapid way to get a 3D character moving, either as a placeholder or as a storyteller.

Can you animate in MetaHuman Creator?

Nope, and you don’t need to. Thanks to the integration with Unreal Engine, there’s no need to learn how to animate outside of your regular software. Any character made in the Creator is automatically uploaded to Quixel Bridge, Unreal’s asset management system. Downloading your character asset and importing it into Unreal for animation presents zero compatibility issues. While Unreal has some great animation tools, you can also use software like Autodesk Maya to record or keyframe animation and then import the animation file into Unreal. If you do this, you’ll need to retarget the data using a free plugin such as the one from Rokoko.

You can try manual keyframe animation in Unreal Engine, but we suggest motion capture as the most efficient option for body animation. To do motion capture, you’ll need some kind of motion capture solution (for full-body movement, you might need a mocap suit, mocap gloves, and a facial tracker) or a library of mocap assets that you can retarget.

Pro tip: Download Rokoko Studio for free, and you can get 100 free motion capture animation assets from the Rokoko Motion Library.

How to use motion capture to animate a MetaHuman in Unreal Engine

What keyframe animators do in days, motion capture can do in minutes. Don’t believe us? We created a fully animated music video starring a MetaHuman in just 12 hours using mocap.

It’s worth repeating that a motion capture suit will only record body movement data. You’ll need additional tools or hardware to record fine motor movement (e.g. the Rokoko Smartgloves record finger and hand movement only). You’ll also need other tools for facial motion capture; more on that in the section on facial animation.

To get your MetaHuman moving in Unreal, you’ll need to do one of the following:

- Download the animation from an asset marketplace and import it into Unreal.

- Record the animation using motion capture software, save it as an FBX file, and import it into Unreal.

- Live stream the animation data directly onto your MetaHuman model in Unreal Engine. From here, you could record looped sequences, record a live performance, or even live stream to your Twitch or YouTube audience.

Retargeting recorded motion capture in UE4

Because every MetaHuman has a standard rig, this is pretty simple once you know how to do it. Here’s a video tutorial on our retargeting workflow inside Unreal using a short clip. You’ll also learn how to create a looped animation from an asset.
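
To make the idea of retargeting more concrete, here is a minimal Python sketch. It is not Unreal's retargeting system, just an illustration of the core idea: motion recorded on one skeleton is remapped bone by bone onto another. The source bone names (`Hips`, `LeftArm`, and so on) are hypothetical, the target names assume the MetaHuman body rig follows Unreal mannequin-style naming (`pelvis`, `upperarm_l`), and `BONE_MAP`, `retarget_frame`, and `retarget_clip` are names made up for this example.

```python
from typing import Dict, List, Tuple

# Euler angles in degrees; an illustrative stand-in for real rotation data.
Rotation = Tuple[float, float, float]
Frame = Dict[str, Rotation]

# Hypothetical source-to-target bone name map (assumption, not a real rig spec).
# A real humanoid rig has many more bones than this.
BONE_MAP: Dict[str, str] = {
    "Hips": "pelvis",
    "Spine": "spine_01",
    "Head": "head",
    "LeftArm": "upperarm_l",
    "RightArm": "upperarm_r",
}


def retarget_frame(source_frame: Frame) -> Frame:
    """Copy each mapped bone's rotation onto its target bone name.

    Source bones without an entry in BONE_MAP are skipped.
    """
    return {
        BONE_MAP[bone]: rotation
        for bone, rotation in source_frame.items()
        if bone in BONE_MAP
    }


def retarget_clip(clip: List[Frame]) -> List[Frame]:
    """Retarget every frame of a clip, preserving frame order."""
    return [retarget_frame(frame) for frame in clip]
```

A production retargeter also has to account for differences in proportions and rest pose between the two skeletons, which this sketch ignores.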

Recording & live streaming your own motion capture into UE4

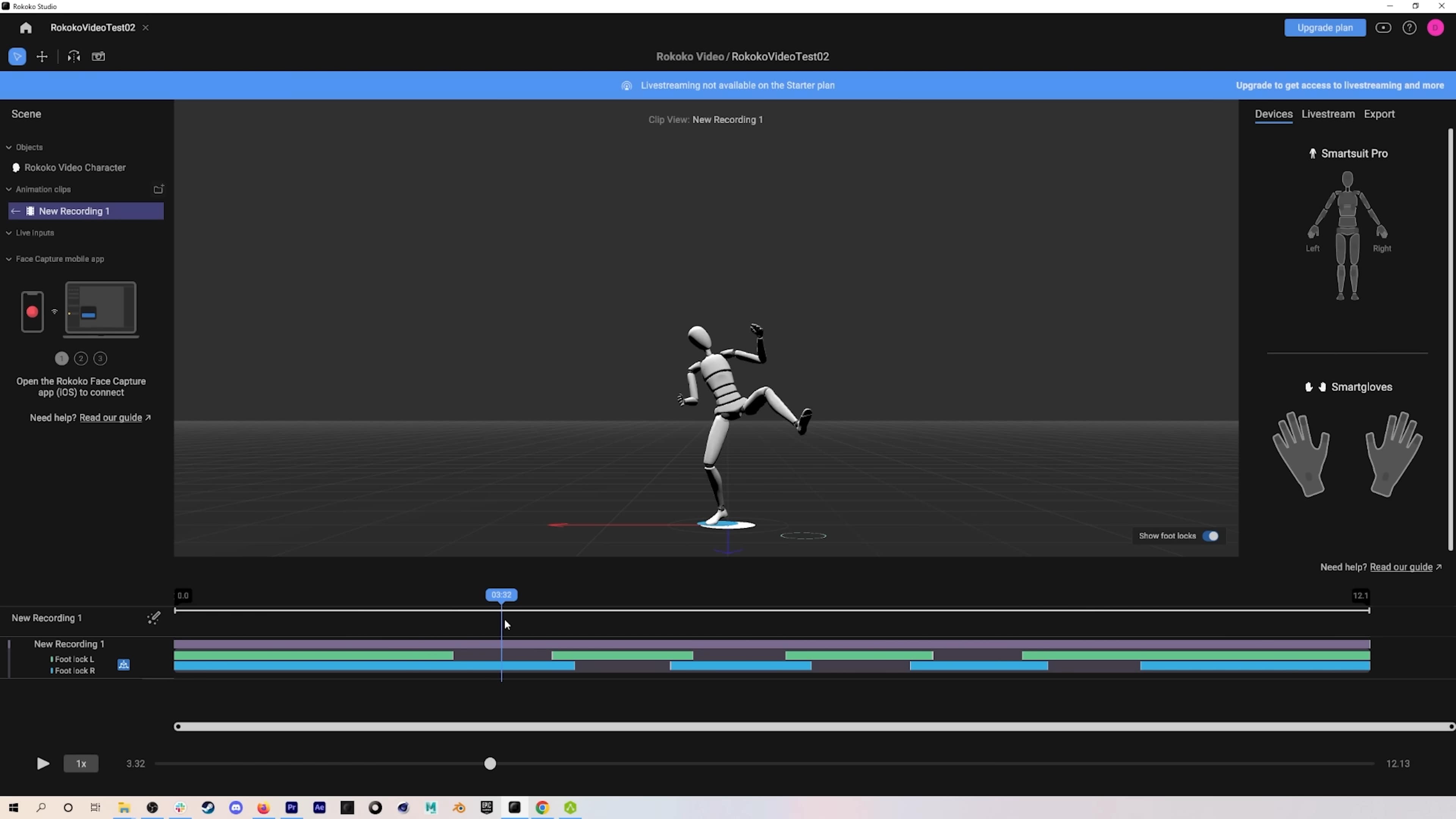

After linking everything up in Rokoko Studio, you can live stream the animation to Unreal or record the animation data and import it as an FBX file. The two workflows are very similar and relatively straightforward. Check out the tutorial below. (Note: This process depends heavily on the mocap solution you’ve chosen, so it may be different if you’re not using Rokoko.)

How to give your MetaHuman lifelike facial animations

To get your MetaHuman walking AND talking, you will have to add facial tracking software into the mix. While optical tracking from multiple specialist cameras can produce fantastic results, it’s expensive and time-consuming. Facial capture apps like Rokoko Face Tracking for iOS provide more approximate animation but are far more affordable. Options that use marker tracking or specialized camera equipment like the facial mocap recorded below are best suited for larger budgets.

If you’ve got an iPhone X or newer, you can do facial mocap at home. Facial tracking apps rely on Apple’s nifty ARKit blendshapes and TrueDepth Camera to accurately track your facial features and output animation data for facial expressions. Using Rokoko Remote in conjunction with our other motion capture solutions, you can retarget captured animation directly to your MetaHuman in Unreal, all in real time. Alternatively, you can record the animation data in Rokoko Studio, export it as an FBX file to your animation software (e.g. Unreal, Maya, Blender, etc.), tweak the animation as you see fit, and import it into Unreal Engine using the standard method.
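
As a rough illustration of what blendshape-based facial data looks like, and of the kind of tweak you might apply to it, here is a small Python sketch. It assumes each frame is a dictionary of ARKit-style blendshape names (such as `jawOpen`) mapped to weights between 0.0 and 1.0, then clamps and smooths those weights over time. The function names and the `alpha` parameter are invented for this example and are not part of Rokoko Studio, ARKit, or Unreal.

```python
from typing import Dict, List

Frame = Dict[str, float]  # blendshape name -> weight, nominally in [0.0, 1.0]


def clamp01(value: float) -> float:
    """Clamp a weight into the 0.0 to 1.0 range."""
    return max(0.0, min(1.0, value))


def smooth_frames(frames: List[Frame], alpha: float = 0.5) -> List[Frame]:
    """Exponentially smooth blendshape weights across frames.

    alpha=1.0 keeps the (clamped) input as-is; smaller values smooth more.
    """
    smoothed: List[Frame] = []
    previous: Frame = {}
    for frame in frames:
        current: Frame = {}
        for name, weight in frame.items():
            target = clamp01(weight)
            # The first time a shape appears, start from its own value.
            start = previous.get(name, target)
            current[name] = start + alpha * (target - start)
        smoothed.append(current)
        previous = current
    return smoothed
```

Smoothing like this trades a little responsiveness for less jitter, so whether it helps depends on the performance you captured.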

Where to find motion capture assets for your MetaHuman

There’s no need to animate by hand or invest right away in motion capture tech. There are hundreds of pre-recorded mocap assets available to download for free. These assets cover all the basic motions a video game character might need, plus some fun extras. Rokoko Studio is free to download and has the world's largest library of motion capture assets. When you first sign up, you’ll get to choose your first 100 assets for free.
