How to record facial motion capture with your iPhone at a professional level

Gone are the days of animating your CG characters keyframe by keyframe. Facial motion capture is now accessible to you — no matter your experience with 3D face animation. Motion capture can help you speed up almost any animation project, jot down previs in minutes, create animations for video games, and even live stream yourself as a 3D avatar (see VTubing). And here’s the kicker: It’s not that expensive. With an iPhone, an app, and 3D software, you can start recording facial motion capture within the next hour — all with free plugins or software trials.

How to record accurate facial expressions

In the past, facial expressions were recorded via complex tracking markers and optical cameras. It’s how the filmmakers transferred Benedict Cumberbatch’s incredible performance onto the CG dragon Smaug in the Hobbit trilogy. While entertaining to watch, expression tracking no longer requires polka dots on your actor’s face. Some studios still use tracking markers today, but boutique or indie teams often find this type of motion capture too expensive, slow, and labor-intensive. Enter the humble iPhone. Apple’s software has a detailed understanding of what makes a face a face, backed by motion data on how millions of human faces move, so you can now track facial expressions onto a 3D character with far less effort.

What you need for facial motion capture:

- An iPhone X or later (newer iPhone models will also work)

- An iPhone mount

- Rokoko Face Capture app

- Rokoko Studio

- Maya, C4D, Unreal, Blender, or any mainstream 3D program.

Why use an iPhone for face mocap?

The iPhone X and later models have the hardware and software you need for facial mocap. While Android is catching up, the iPhone has been ahead of the game for longer, so most motion capture apps are designed around it. You can still transfer the tracked data to a Windows PC if you choose. Here’s what enables facial motion capture on the iPhone X and up:

TrueDepth camera: As the name suggests, this front-facing camera can measure your facial features in 3D space (and not just as a flat 2D image).

Apple’s ARKit facial performance capture: ARKit’s face tracking uses a standard set of blendshapes to identify key facial expressions and interpolates between them. There are 52 blendshapes in total. If your character doesn’t have these blendshapes yet, you can use Polywink’s excellent automatic service, which generates the blendshapes for your custom 3D head models. They can also assist you with a full facial rig containing 256 blendshapes for finer keyframe animation.
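To make "interpolating between blendshapes" a little more concrete, here’s a minimal sketch of the general idea: each blendshape stores one extreme expression, and a weight between 0 and 1 says how much of it to mix into the neutral face. The three-vertex "mesh", the vertex positions, and the `blend` function are made up for illustration. This is not Apple’s or Rokoko’s actual implementation.

```python
# Illustrative only: a tiny "mesh" of three 3D vertices, not real ARKit data.
NEUTRAL = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]

# Each blendshape stores the vertex positions of one extreme expression.
# The names follow ARKit's convention; the positions are invented.
TARGETS = {
    "jawOpen": [(0.0, -1.0, 0.0), (1.0, -1.0, 0.0), (0.0, 1.0, 0.0)],
    "mouthSmileLeft": [(0.0, 0.0, 0.0), (1.0, 0.5, 0.0), (0.0, 1.0, 0.0)],
}


def blend(neutral, targets, weights):
    """Return neutral + sum(weight * (target - neutral)) for every vertex."""
    result = []
    for i, base in enumerate(neutral):
        vertex = list(base)
        for name, weight in weights.items():
            w = min(max(weight, 0.0), 1.0)  # keep each weight inside [0, 1]
            target = targets[name][i]
            for axis in range(3):
                vertex[axis] += w * (target[axis] - base[axis])
        result.append(tuple(vertex))
    return result


# A half-open jaw combined with a full left smile.
pose = blend(NEUTRAL, TARGETS, {"jawOpen": 0.5, "mouthSmileLeft": 1.0})
print(pose)
```

With every weight at 0 you get the neutral face back, which is why a character needs a clean neutral pose plus one target shape per expression.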

How to record and apply animation data for your facial animations

This general workflow works for most popular 3D software such as Blender, Maya, C4D, and Unity. You can find our full library of video tutorials here.

- Step 1: Get yourself an iPhone and position it so the camera points straight at your face (not at an up or down angle). A phone mount allows your actor to move around during their performance without concern for head rotation issues (and it’s what we use at Rokoko). However, if you want relatively calm animations, you can just set up your phone somewhere secure without purchasing any add-ons.

- Step 2: Download the Rokoko Face Capture app. You’ll also need to grab Rokoko Studio, which is free to use, to record the animation data. If you’re planning on live-streaming directly into your 3D software, download one of these free plugins.

- Step 3: Make sure each character’s face has the 52 necessary blendshapes before trying to retarget mocap data. Double-check that the blendshape names follow the standard naming convention in Apple’s documentation. You’ll want your geometry to be clean, with zero intersections. Eyebrows, ears, and hair should all be combined into one mesh.

- Step 4: Open up Rokoko Studio and the Rokoko Face Capture app to pair them together. Here’s a quick tutorial that teaches you how to get the best result.

And there you have it! All your facial motion capture animation data is now available to record and export.
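If you’d rather not eyeball the blendshape names in Step 3, a small script can do the double-check for you. The sketch below is only an assumption about how you might do it: the 52 names are the camelCase identifiers as we understand them from Apple’s ARKit documentation (verify them there, and check which spelling your own pipeline expects), and `check_blendshapes` is a hypothetical helper, not part of any Rokoko tool.

```python
# Assumed list: the 52 ARKit blendshape identifiers, built from base names.
# Verify against Apple's documentation before relying on it.
SIDED = [
    "eyeBlink", "eyeLookDown", "eyeLookIn", "eyeLookOut", "eyeLookUp",
    "eyeSquint", "eyeWide", "mouthSmile", "mouthFrown", "mouthDimple",
    "mouthStretch", "mouthPress", "mouthLowerDown", "mouthUpperUp",
    "browDown", "browOuterUp", "cheekSquint", "noseSneer",
]
UNSIDED = [
    "jawForward", "jawLeft", "jawRight", "jawOpen", "mouthClose",
    "mouthFunnel", "mouthPucker", "mouthLeft", "mouthRight",
    "mouthRollLower", "mouthRollUpper", "mouthShrugLower", "mouthShrugUpper",
    "browInnerUp", "cheekPuff", "tongueOut",
]
ARKIT_NAMES = [base + side for base in SIDED for side in ("Left", "Right")] + UNSIDED


def check_blendshapes(character_names):
    """Return (missing names, names that match only when case is ignored)."""
    expected = set(ARKIT_NAMES)
    present = set(character_names)
    missing = sorted(expected - present)
    lowered = {name.lower() for name in ARKIT_NAMES}
    miscased = sorted(
        name for name in present
        if name not in expected and name.lower() in lowered
    )
    return missing, miscased


# Example: a character with one shape missing and one misspelled by case.
names = [n for n in ARKIT_NAMES if n not in ("tongueOut", "jawOpen")] + ["JawOpen"]
missing, miscased = check_blendshapes(names)
print("missing:", missing)
print("check the spelling of:", miscased)
```

In Blender, for example, you could likely collect a mesh’s names with `[kb.name for kb in obj.data.shape_keys.key_blocks]` and pass that list in; other 3D programs have their own way of listing blendshapes.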

What about a real-time facial capture system?

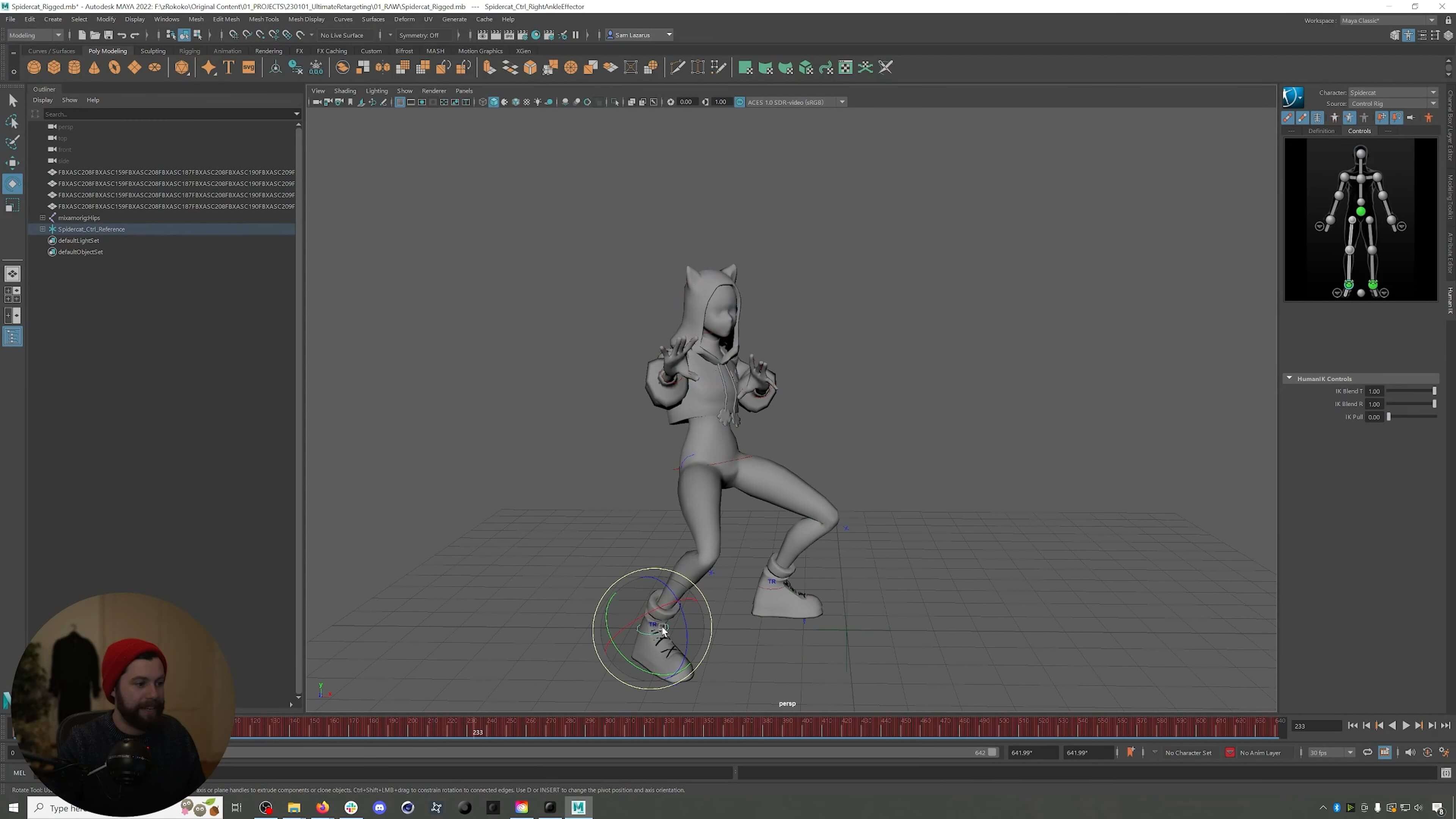

Real-time facial motion capture is easy as pie within most popular 3D animation software. All you need to do is download Rokoko’s retargeting plugin and retarget the animation data live. Here’s a tutorial that shows you how to achieve that with Blender.
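If you’re wondering what "retargeting" means in practice, here’s a rough sketch of the core idea: each incoming frame is a set of blendshape weights, and retargeting maps those onto whatever your character’s shapes are called. Everything here is hypothetical, including the `retarget_frame` function and the character shape names like `Mouth_Open`. The actual plugin handles this for you and may work quite differently.

```python
def retarget_frame(frame, name_map):
    """Map captured blendshape weights onto a character's own shape names."""
    out = {}
    for source_name, weight in frame.items():
        target_name = name_map.get(source_name)
        if target_name is None:
            continue  # the character has no matching shape, so skip it
        out[target_name] = min(max(weight, 0.0), 1.0)  # clamp to [0, 1]
    return out


# Hypothetical mapping from ARKit-style names to a custom character's names.
NAME_MAP = {
    "jawOpen": "Mouth_Open",
    "eyeBlinkLeft": "Blink_L",
    "eyeBlinkRight": "Blink_R",
}

# One made-up frame of captured weights (one value is deliberately too high).
frame = {"jawOpen": 0.42, "eyeBlinkLeft": 1.2, "tongueOut": 0.1}
print(retarget_frame(frame, NAME_MAP))
```

If your character already uses the standard ARKit names, the mapping is simply each name to itself, which is one reason sticking to the standard naming convention saves time.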

And if you want to take it a step further, you can also livestream your animated character to your Twitch channel or anywhere, really. Check out the tutorial below to learn how to livestream using a game engine like Unity or Unreal.

How to add in full-body motion capture with facial motion capture

Don’t worry, it’s pretty easy. The workflow for importing full-body motion capture into your scene is quite similar to the workflow we’ve described so far. There are a few minor hardware changes, but your software stays the same:

- You’ll need a motion capture suit (like this one)

- You have to get additional gloves for finger animation

- There’s no camera necessary — the suit records using inertial sensors

Check out the tutorial below to learn the workflow in Cinema 4D.

Get started with facial motion capture

You don’t need any special equipment for facial animations. In an hour or so, you can download the software and start recording for your project right away. Check it out in more detail.

Book a personal demonstration

Schedule a free personal Zoom demo with our team. We’ll show you how our mocap tools work and answer all your questions.

Product Specialists Francesco and Paulina host Zoom demos from the Copenhagen office